Context Graphs for Regulatory Updates: From Crawled Notices to Agent-First Intelligence

Regulatory monitoring is not a “search problem.” It is a systems problem where new information arrives continuously, changes meaning over time, and must be interpreted in context: which regulator issued it, what rule it amends, which entities it impacts, how it relates to prior guidance, and why it matters to a specific organization.

A context graph is a practical way to represent that living context. Instead of treating each update as an isolated document to retrieve and summarize, a context graph encodes entities, relationships, timelines, and provenance, then allows retrieval and reasoning to be guided by that structure. Graph-based RAG approaches have emerged precisely to improve retrieval and synthesis over large, narrative corpora by adding graph structure as a first-class primitive.

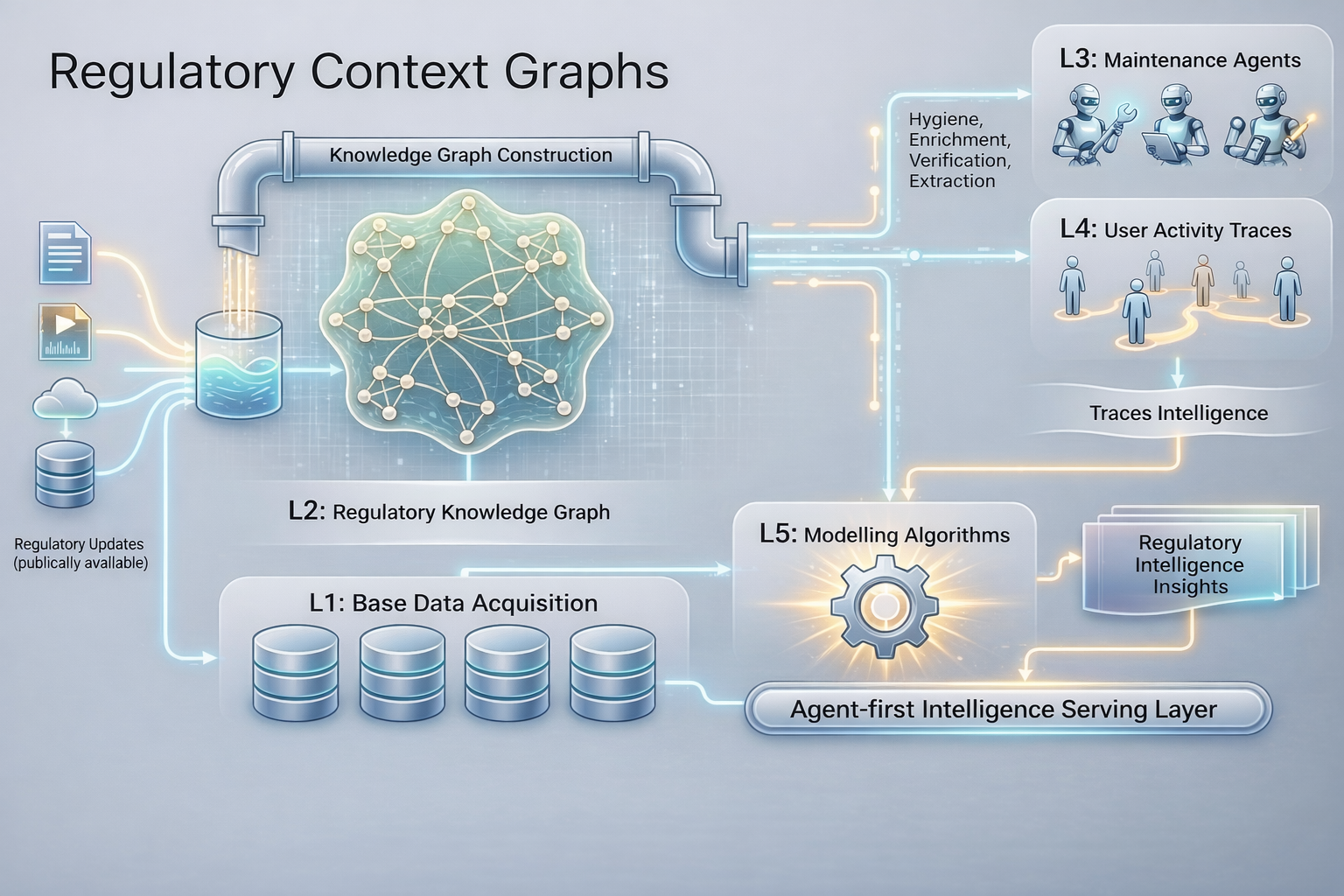

This article lays out a prescriptive, layered architecture for building context graphs for regulatory updates, including acquisition, graph construction, agentic maintenance with human verification, user activity traces, modeling, and agent-first delivery.

What is a context graph in the regulatory domain?

A regulatory context graph is a graph-structured representation of regulatory knowledge where:

- Nodes represent entities such as regulators, statutes, rules, guidance, enforcement actions, obligations, regulated entities, products, jurisdictions, and dates.

- Edges represent relationships such as amends, supersedes, clarifies, enforces, applies_to, references, effective_on, and interprets.

- Provenance is explicit: every claim and edge can be traced back to a source artifact (URL, publication ID, paragraph, version).

- Time is native: edges and node attributes can be valid within time windows to support “as-of” reasoning.

The goal is not to build a perfect “legal ontology.” The goal is to build a system that can accumulate regulatory context, keep it clean as it evolves, and deliver actionable intelligence with defensible evidence trails.
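To make the four properties above concrete, here is a minimal sketch of an edge record that carries provenance and a validity window, plus an "as-of" filter. The class names, field names, and the example URL are illustrative assumptions, not a prescribed schema.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class Provenance:
    source_url: str       # where the claim came from
    publication_id: str   # hypothetical stable identifier of the source
    paragraph: int        # paragraph index within the source
    version: int          # snapshot version of the source


@dataclass(frozen=True)
class Edge:
    src: str              # canonical node ID
    rel: str              # e.g. "amends", "supersedes", "applies_to"
    dst: str
    provenance: Provenance
    valid_from: date
    valid_to: Optional[date] = None   # None means the edge is still valid

    def valid_on(self, day: date) -> bool:
        """True if the edge holds on `day` (the end date is exclusive)."""
        return self.valid_from <= day and (self.valid_to is None or day < self.valid_to)


def edges_as_of(edges: List[Edge], day: date) -> List[Edge]:
    """Return only the edges that were valid on `day`."""
    return [e for e in edges if e.valid_on(day)]


# Hypothetical data: rule A applied to banks until rule B took over in 2023.
prov = Provenance("https://example.org/notice-1", "NOTICE-1", paragraph=4, version=1)
edges = [
    Edge("rule:A", "applies_to", "entity_type:bank", prov, date(2020, 1, 1), date(2023, 1, 1)),
    Edge("rule:B", "applies_to", "entity_type:bank", prov, date(2023, 1, 1)),
]
print([e.src for e in edges_as_of(edges, date(2021, 6, 1))])  # ['rule:A']
```

Keeping the validity window on the edge itself, rather than on the document, is one way to let "what was true as of a date" be answered with a filter instead of a re-read of the corpus.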

Why a context graph beats “just search + summarize” for regulatory updates

Keyword/semantic search plus summarization is a useful baseline, but it degrades under the exact conditions that define regulatory work:

- Cross-document dependency: regulatory meaning lives in amendments, definitions, exceptions, and incorporations by reference. Search tends to retrieve fragments, not dependency chains.

- Change over time: users routinely need “what changed since last quarter,” “what is currently in force,” and “what was true as of a date.” A graph can model versioning and effective dates directly.

- Entity ambiguity: organizations, products, and legal instruments collide in naming (“Guidance Note,” “Circular,” “Final Rule”). Graph hygiene (entity resolution + canonical IDs) prevents repeated ambiguity.

- Provenance and defensibility: compliance workflows require verifiable links back to authoritative sources; a graph can store claim-level provenance and support audit trails.

- Personal relevance: two teams can read the same update and care about different implications; user traces on top of the graph allow relevance scoring and routing to be learned, not hard-coded.

Graph-guided retrieval and synthesis (often described under GraphRAG patterns) is increasingly used because the graph provides structure for navigation, aggregation, and grounded generation, rather than relying on the model to infer structure on the fly.
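As a rough illustration of the cross-document dependency point, the sketch below walks typed edges outward from a new notice to assemble the chain of instruments it depends on. The instrument IDs and the choice of which relation types count as dependencies are assumptions made for the example.

```python
from collections import deque

# (source, relation, target) triples with hypothetical instrument IDs.
EDGES = [
    ("notice:2024-07", "amends", "rule:12"),
    ("rule:12", "references", "statute:5"),
    ("rule:12", "supersedes", "rule:09"),
    ("faq:33", "clarifies", "rule:12"),
]


def dependency_chain(start, edges, relations=("amends", "supersedes", "references")):
    """Breadth-first walk over outgoing edges of the chosen relation types."""
    outgoing = {}
    for src, rel, dst in edges:
        if rel in relations:
            outgoing.setdefault(src, []).append((rel, dst))

    seen, chain, queue = {start}, [], deque([start])
    while queue:
        node = queue.popleft()
        for rel, dst in outgoing.get(node, []):
            chain.append((node, rel, dst))
            if dst not in seen:       # guard against cycles in citation graphs
                seen.add(dst)
                queue.append(dst)
    return chain


chain = dependency_chain("notice:2024-07", EDGES)
for hop in chain:
    print(hop)
```

A plain search over the same corpus would likely return the notice and perhaps rule:12, but not the ordered chain that connects them to statute:5 and rule:09.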

Layered architecture for regulatory context graphs

A practical regulatory context graph is best built as a layered system. Each layer has a distinct purpose, clear outputs, and measurable quality metrics.

Layer 1 — Base knowledge acquisition (data ingestion)

This layer exists to ensure coverage and freshness: regulatory notices, speeches, enforcement actions, consultations, FAQs, circulars, and press releases should arrive continuously with minimal latency. It produces a durable, versioned corpus of source artifacts and metadata.

Core responsibilities

- Continuous crawling and ingestion across official sources (and optionally trusted secondary aggregators).

- Canonical metadata capture: publisher/regulator, jurisdiction, publication timestamp, content type, and stable identifiers.

- Snapshotting/versioning: detect edits, replacements, and removals; retain prior versions for audit and “as-of” views.

Key design choices

- Treat acquisition as append-only with explicit version links (e.g., supersedes, updated_by) to avoid silent drift.

- Capture raw HTML/PDF, extracted text, and normalized markdown to support multiple downstream pipelines.
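One way to sketch append-only acquisition with explicit version links is a store that hashes each crawled body and only appends a new snapshot when the hash changes. The `SnapshotStore` class and its fields are hypothetical; a production system would persist snapshots and also keep the raw HTML/PDF alongside the extracted text.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Snapshot:
    url: str
    version: int
    sha256: str
    text: str
    supersedes: Optional[int]   # version number of the prior snapshot, if any


@dataclass
class SnapshotStore:
    """Append-only: snapshots are added, never modified or deleted."""
    _by_url: dict = field(default_factory=dict)

    def ingest(self, url: str, text: str) -> Optional[Snapshot]:
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        versions = self._by_url.setdefault(url, [])
        if versions and versions[-1].sha256 == digest:
            return None                         # unchanged since the last crawl
        prev = versions[-1].version if versions else None
        snap = Snapshot(url, len(versions) + 1, digest, text, supersedes=prev)
        versions.append(snap)
        return snap

    def history(self, url: str) -> list:
        return list(self._by_url.get(url, []))


store = SnapshotStore()
url = "https://example.org/circular-7"          # hypothetical source URL
first = store.ingest(url, "Effective 1 January.")
same = store.ingest(url, "Effective 1 January.")
second = store.ingest(url, "Effective 1 March.")
print(first.version, same, second.version, second.supersedes)  # 1 None 2 1
```

Because the earlier snapshot is retained, a silent edit to the effective date shows up as a new version linked to the old one rather than overwriting it.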

Layer 2 — Knowledge graph construction (from documents to nodes/edges)

This layer converts a stream of documents into a queryable, evolving graph. Its job is to produce stable entity IDs, typed relationships, and time-aware facts grounded in source evidence.

Core graph primitives

- Nodes: regulator, instrument, clause/section, concept/definition, obligation, enforcement action, entity type, jurisdiction, date.

- Edges: references, amends, supersedes, clarifies, effective_on, applies_to, enforces, defines, exempts.

Construction pipeline (typical stages)

- Parsing + segmentation (document → sections → paragraphs → claims/snippets).

- Entity extraction and linking (mentions → canonical entities).

- Relationship extraction (explicit citations + inferred relationships, each with confidence and provenance).

- Temporal annotation (published date, effective date, compliance deadlines, retroactivity flags).

Practical guidance

- Keep a strict separation between facts (with provenance) and interpretations (with model + reviewer attribution).

- Design the schema to be extensible; regulatory data evolves faster than ontologies.
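The relationship-extraction stage can be sketched for the simplest case, explicit citations, with a regular expression that emits typed edges carrying paragraph-level provenance. The citation pattern ("Rule 12.3"), the cue verbs, and the fixed confidence value are assumptions for illustration; real notices would need jurisdiction-specific patterns and a calibrated confidence model.

```python
import re

# Hypothetical pattern: a cue verb followed by a citation such as "Rule 12.3".
CITATION = re.compile(
    r"\b(amends|supersedes|clarifies)\s+(Rule\s+\d+(?:\.\d+)*)",
    re.IGNORECASE,
)


def extract_edges(doc_id, paragraphs):
    """Turn explicit citations into edges with paragraph-level provenance."""
    edges = []
    for idx, text in enumerate(paragraphs):
        for m in CITATION.finditer(text):
            edges.append({
                "src": doc_id,
                "rel": m.group(1).lower(),
                "dst": re.sub(r"\s+", " ", m.group(2)),   # normalize spacing
                "confidence": 0.9,                        # placeholder value
                "provenance": {"doc_id": doc_id, "paragraph": idx, "span": m.span()},
            })
    return edges


paragraphs = [
    "This notice amends Rule 12.3 with effect from 1 March.",
    "No citations here.",
    "It also supersedes Rule 9.",
]
edges = extract_edges("notice:2024-07", paragraphs)
for e in edges:
    print(e["rel"], e["dst"], e["provenance"]["paragraph"])
```

Storing the character span lets a reviewer jump straight to the sentence that justified the edge, which keeps extracted facts separable from later interpretations.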

Layer 3 — Agents to maintain, clean, enrich the graph (AI-driven + human-in-the-loop)

This layer exists to keep the graph usable over time. Regulatory graphs drift: duplicates appear, names shift, sources change formats, and edge confidence needs recalibration. AI agents provide scale, while human review provides trust.

Agent roles (organized by function)

Ingestion & change-detection agents

- Detect source changes (format shifts, moved URLs, republished documents) and automatically update acquisition rules.

- Identify duplicate publications and link versions when an “update” is silently republished.

Extraction & normalization agents

- Convert raw documents into consistent structure (sections, tables, citations).

- Normalize naming (regulators, instruments, jurisdictions) and map them to canonical forms.

Graph hygiene agents

- Entity resolution and deduplication (merge/split entities; maintain alias sets).

- Edge cleanup (remove stale edges, reconcile conflicting relationships, deprecate broken nodes).

- Schema conformance checks (type validation, required fields, forbidden edge types).
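Entity resolution with alias sets can be sketched as a small registry that maps normalized mentions to canonical IDs and supports merging duplicates. The `EntityRegistry` class, the normalization rule, and the regulator name are invented for the example; real resolution usually adds fuzzy matching and human confirmation for merges.

```python
import re


def normalize(name: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    return re.sub(r"\s+", " ", re.sub(r"[^\w\s]", "", name.lower())).strip()


class EntityRegistry:
    def __init__(self):
        self._alias_to_id = {}   # normalized alias -> canonical ID
        self._aliases = {}       # canonical ID -> set of raw aliases

    def register(self, canonical_id, *aliases):
        for alias in aliases:
            self._alias_to_id[normalize(alias)] = canonical_id
            self._aliases.setdefault(canonical_id, set()).add(alias)

    def resolve(self, mention):
        """Return the canonical ID for a mention, or None if unresolved."""
        return self._alias_to_id.get(normalize(mention))

    def merge(self, keep_id, drop_id):
        """Fold drop_id into keep_id, re-pointing all of its aliases."""
        for alias in self._aliases.pop(drop_id, set()):
            self.register(keep_id, alias)

    def aliases(self, canonical_id):
        return set(self._aliases.get(canonical_id, set()))


reg = EntityRegistry()
reg.register("regulator:efa", "EFA", "Example Financial Authority")
reg.register("regulator:efa-dup", "Example Financial Authority (EFA)")
reg.merge("regulator:efa", "regulator:efa-dup")
print(reg.resolve("example financial authority (efa)"))  # regulator:efa
```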

Enrichment & linking agents

- Expand cross-references (follow citations to referenced instruments and link them into the graph).

- Add derived nodes (obligations, themes, impacted areas) with traceable justification.

- Build “explanation subgraphs” that connect updates to impacted obligations and prior context.

Verification & audit agents (human-in-the-loop)

- Prioritize review queues by risk (high impact, low confidence, high novelty).

- Generate reviewer-friendly diffs: “what changed in the graph and why,” with source highlights.

- Attach audit artifacts: reviewer decision, rationale, and final confidence.

Human-in-the-loop pattern

- Use AI for breadth and triage; use humans for high-impact confirmations.

- Treat every human correction as training data for future extraction, resolution, and ranking.
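Risk-based prioritization of the review queue might look like the sketch below, which scores each candidate edge from impact, extraction confidence, and novelty and serves the highest-priority items first. The scoring formula and its weights are assumptions chosen only to show the shape of the idea; they would need tuning against real reviewer outcomes.

```python
import heapq


def review_priority(impact, confidence, novelty):
    """Higher value means review sooner. Inputs are assumed to lie in [0, 1]."""
    return impact * (1.0 - confidence) + 0.5 * novelty   # weights are assumptions


# Hypothetical candidate edges awaiting human confirmation.
candidates = [
    {"edge_id": "e1", "impact": 0.9, "confidence": 0.40, "novelty": 0.2},
    {"edge_id": "e2", "impact": 0.3, "confidence": 0.95, "novelty": 0.1},
    {"edge_id": "e3", "impact": 0.8, "confidence": 0.90, "novelty": 0.9},
]

# heapq is a min-heap, so priorities are negated to pop the largest first.
queue = [
    (-review_priority(c["impact"], c["confidence"], c["novelty"]), c["edge_id"])
    for c in candidates
]
heapq.heapify(queue)

order = []
while queue:
    _, edge_id = heapq.heappop(queue)
    order.append(edge_id)
print(order)  # ['e1', 'e3', 'e2']
```

The high-impact, low-confidence edge is reviewed first, while the low-impact edge the model is already confident about waits at the back of the queue.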

Layer 4 — User activity traces atop the knowledge graph

This layer captures how the graph is used so the system can learn what matters, to whom, and under what conditions. The goal is to convert interaction exhaust into relevance signals without compromising auditability.

What to record

- Queries, clicks, traversals, saved views, exports, and “follow” relationships on entities and topics.

- Feedback events: “useful/not useful,” escalations, dismissals, and human annotations.

- Workflow context: team, role, jurisdiction scope, and internal taxonomy tags (where appropriate).

How traces change the system

- Personalization: rank subgraphs differently for legal vs. compliance ops vs. risk.

- Routing: push relevant updates to the right owners based on observed behavior and explicit subscriptions.

- Continuous evaluation: identify where users repeatedly correct the system and prioritize fixes.
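A minimal sketch of turning interaction traces into routing signals: events are weighted by type, accumulated per team and entity, and an update is routed to teams whose accumulated interest crosses a threshold. The event weights, threshold, and team and topic names are all illustrative assumptions.

```python
from collections import defaultdict

# Assumed weights: stronger actions count more, dismissals count against.
EVENT_WEIGHTS = {"click": 1.0, "save": 3.0, "export": 3.0, "follow": 5.0, "dismiss": -2.0}


def interest_profile(events):
    """Aggregate weighted events into {team: {entity: score}}."""
    scores = defaultdict(lambda: defaultdict(float))
    for ev in events:
        scores[ev["team"]][ev["entity"]] += EVENT_WEIGHTS.get(ev["type"], 0.0)
    return {team: dict(entities) for team, entities in scores.items()}


def route(update_entities, profiles, threshold=3.0):
    """Teams whose interest in any entity touched by the update meets the threshold."""
    return sorted(
        team for team, entities in profiles.items()
        if any(entities.get(e, 0.0) >= threshold for e in update_entities)
    )


events = [
    {"team": "legal", "entity": "topic:capital", "type": "click"},
    {"team": "legal", "entity": "topic:capital", "type": "follow"},
    {"team": "risk", "entity": "topic:capital", "type": "click"},
    {"team": "risk", "entity": "topic:capital", "type": "dismiss"},
    {"team": "ops", "entity": "topic:reporting", "type": "save"},
]
profiles = interest_profile(events)
print(route(["topic:capital"], profiles))  # ['legal']
```

Explicit subscriptions would normally be merged with these learned scores so that a team never loses an alert it asked for.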

Layer 5 — Modeling layer atop the knowledge graph

This layer turns the graph into predictive and decision-support capabilities. It produces scores, embeddings, classifications, and forecasts that can be attached back onto nodes/edges as attributes.

Common modeling outputs

- Relevance scoring: per user/team/entity, combining graph proximity + behavioral signals.

- Novelty / change magnitude: quantify how materially a new update alters existing obligation subgraphs.

- Risk propagation: spread impact from a regulatory node through obligations to affected products/entities.

- Graph + text embeddings: represent entities and subgraphs for semantic retrieval and clustering.

- Trend detection: emerging topic clusters by regulator/jurisdiction and early signal surfacing.

Operational principle

- Models should write back into the graph as explainable annotations (e.g., “risk_score=0.83 because path X→Y→Z exists and deadlines fall within N days”), not as opaque conclusions.
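Risk propagation with explainable write-back could be sketched as follows: a score is pushed along outgoing edges, attenuated per hop, and each annotated node records the path that produced its score. The decay factor and node IDs are assumptions, and a real model would likely weight edges by type and confidence rather than use a flat decay.

```python
def propagate_risk(graph, source, base_score, decay=0.8):
    """Push a score along outgoing edges, multiplying by `decay` per hop.

    Returns {node: {"risk_score": float, "because": [path of node IDs]}},
    keeping the highest-scoring path found for each node.
    """
    annotations = {source: {"risk_score": base_score, "because": [source]}}
    frontier = [source]
    while frontier:
        node = frontier.pop()
        current = annotations[node]
        for nxt in graph.get(node, []):
            score = round(current["risk_score"] * decay, 4)
            # Only overwrite when the new path yields a strictly higher score,
            # which also prevents endless revisits in cyclic graphs.
            if nxt not in annotations or score > annotations[nxt]["risk_score"]:
                annotations[nxt] = {
                    "risk_score": score,
                    "because": current["because"] + [nxt],
                }
                frontier.append(nxt)
    return annotations


# Hypothetical adjacency list: notice -> obligation -> product.
graph = {
    "notice:X": ["obligation:Y"],
    "obligation:Y": ["product:Z"],
}
result = propagate_risk(graph, "notice:X", base_score=1.0)
print(result["product:Z"])
# {'risk_score': 0.64, 'because': ['notice:X', 'obligation:Y', 'product:Z']}
```

The `because` path is what makes the score an annotation rather than a conclusion: a reviewer can see which chain of nodes produced it.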

Sample annotations schema:

    {
      "id": "string",
      "classification": {
        "metadata": {
          "title": "string",
          "url": "string",
          "language": ["string"],
          "extraction_note": ["string"]
        },
        "regulatory_source": {
          "name": "string",
          "division_office": "string",
          "other_agency": ["string"]
        },
        "update_type": "string"
      },
      "metadata": {
        "critical_dates": {
          "pub_date_content": "string",
          "updated_date": "string",
          "effective_date": "string",
          "early_adoption_date": "string",
          "compliance_date": "string",
          "comment_deadline": "string",
          "other_dates": [
            {
              "date": "string",
              "description": "string"
            }
          ]
        },
        "impacted_business": {
          "jurisdiction": ["string"],
          "industry": ["string"],
          "type": ["string"],
          "size": ["string"],
          "other_notes": ["string"]
        },
        "impact_summary": {
          "objective": "string",
          "what_changed": "string",
          "why_it_matters": "string",
          "key_requirements": ["string"],
          "risk_impact": "string"
        },
        "impacted_functions": ["string"],
        "actionables": {
          "policy_change": "string",
          "process_change": "string",
          "reporting_change": "string",
          "tech_data_change": "string",
          "training_change": "string",
          "other_change": "string"
        },
        "penalties_consequences": ["string"],
        "references": {
          "past_release": ["string"],
          "statutes": ["string"],
          "rules": ["string"],
          "precedents": ["string"],
          "personnel": ["string"],
          "other_ref": ["string"]
        },
        "tags": ["string"]
      },
      "scores": {
        "impact": {
          "score": "number",
          "label": "string",
          "confidence": "number"
        },
        "urgency": {
          "score": "number",
          "label": "string",
          "confidence": "number"
        },
        "relevance": {
          "score": "number",
          "label": "string",
          "confidence": "number"
        }
      }
    }
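Before annotations are written back to the graph, a light structural check can catch malformed records. The sketch below validates only the `scores` block of the sample schema; the function name and the error message format are assumptions, and a fuller implementation might use a schema validation library instead.

```python
SCORE_KEYS = ("impact", "urgency", "relevance")


def validate_scores(record):
    """Return a list of problems found in record['scores']; empty means OK."""
    problems = []
    scores = record.get("scores", {})
    for key in SCORE_KEYS:
        entry = scores.get(key)
        if not isinstance(entry, dict):
            problems.append(f"scores.{key}: missing")
            continue
        for name in ("score", "confidence"):
            value = entry.get(name)
            # bool is a subclass of int in Python, so exclude it explicitly.
            if isinstance(value, bool) or not isinstance(value, (int, float)):
                problems.append(f"scores.{key}.{name}: expected number")
        if not isinstance(entry.get("label"), str):
            problems.append(f"scores.{key}.label: expected string")
    return problems


good = {"scores": {k: {"score": 0.5, "label": "Medium", "confidence": 0.8} for k in SCORE_KEYS}}
bad = {
    "scores": {
        "impact": {"score": "high", "label": "High", "confidence": 0.7},
        "relevance": {"score": 0.2, "label": "Low", "confidence": 0.9},
    }
}
print(validate_scores(good))  # []
print(validate_scores(bad))
```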

Layer 6 — Access and delivery of intelligence over the graph (agent-first, continuous, triggered)

This layer exists to make intelligence usable in real workflows. It provides interfaces that allow humans, applications, and agents to retrieve and act on graph-backed insights with provenance.

Delivery modes

- Pull: interactive Q&A, graph exploration, and “drill-down” from summary → evidence subgraph → source text.

- Push: triggered alerts when graph patterns match (e.g., “new amendment to capital requirements in jurisdictions A/B”).

- Continuous: rolling digests that adapt as the graph evolves and user attention shifts.

Agent-first consumption

- APIs for subgraph retrieval, provenance bundles, and structured “impact packets.”

- Bot interfaces that return actions + evidence: suggested owners, deadlines, and linked dependencies.

- Agent-to-agent workflows where specialized agents exchange graph-backed artifacts using emerging interoperability patterns (e.g., A2A-style agent communication).

Non-negotiable requirement

- Every delivered insight must be accompanied by a defensible evidence trail: nodes/edges used, source documents, and timestamps.
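The push mode and the evidence requirement can be sketched together: a trigger describes a graph pattern, and any newly added edge that matches produces an alert packaged with its evidence. The `Trigger` fields, the edge dictionary keys, and the example values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trigger:
    name: str
    relation: str              # edge type to watch, e.g. "amends"
    topic: str                 # topic tag assumed to be attached to the edge
    jurisdictions: frozenset   # jurisdictions the subscriber cares about


def matches(trigger, edge):
    return (
        edge["rel"] == trigger.relation
        and edge["topic"] == trigger.topic
        and edge["jurisdiction"] in trigger.jurisdictions
    )


def build_alert(trigger, edge):
    """Package the matched edge with the evidence needed to defend the alert."""
    return {
        "trigger": trigger.name,
        "edge": (edge["src"], edge["rel"], edge["dst"]),
        "evidence": {
            "source_url": edge["source_url"],
            "retrieved_at": edge["retrieved_at"],
        },
    }


def alerts_for(triggers, new_edges):
    return [build_alert(t, e) for e in new_edges for t in triggers if matches(t, e)]


triggers = [Trigger("capital-amendments", "amends", "capital_requirements", frozenset({"A", "B"}))]
new_edges = [
    {"src": "notice:1", "rel": "amends", "dst": "rule:12", "topic": "capital_requirements",
     "jurisdiction": "A", "source_url": "https://example.org/n1", "retrieved_at": "2024-07-01T09:00:00Z"},
    {"src": "notice:2", "rel": "amends", "dst": "rule:30", "topic": "capital_requirements",
     "jurisdiction": "C", "source_url": "https://example.org/n2", "retrieved_at": "2024-07-01T09:05:00Z"},
]
alerts = alerts_for(triggers, new_edges)
print(alerts)
```

Only the first edge fires the trigger, because the second falls outside the subscribed jurisdictions; the alert carries its source URL and retrieval timestamp rather than a bare summary.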

Practical implementation notes

- Start with a narrow schema and grow it deliberately. A small, typed set of nodes and edges with excellent provenance beats an expansive ontology with inconsistent data. Expansion should be driven by repeated product questions that cannot be answered cleanly with existing primitives.

- Make time a first-class concept from day one. Regulatory interpretation often hinges on “effective date,” “transition period,” and “superseded by” chains. Temporal attributes and version edges prevent “current truth” from overwriting historical truth.

- Separate extraction confidence from business confidence. Models can be confident about extraction while the organization still requires human validation for high-impact edges. Store both, and build review workflows around business confidence thresholds.

- Invest early in entity resolution and canonical IDs. Ambiguous naming is a compounding failure mode: it breaks deduplication, relevance ranking, and routing. Canonical IDs plus alias sets are foundational infrastructure, not polish.

- Treat provenance as a product feature, not metadata. Users need to click through from a conclusion to the evidence chain instantly, especially in regulated environments. Provenance should be queryable and packaged alongside every response.

- Design for hybrid retrieval (graph + vector + rules). Pure vector search misses relationship logic; pure graph traversal misses semantic similarity. A hybrid approach can retrieve candidate subgraphs semantically, then constrain and assemble them through typed traversals.

- Build human review into the pipeline, not as an afterthought. Human-in-the-loop should be an explicit layer with queues, prioritization, and audit artifacts. Review workflows are also the highest-quality source of continuous improvement signals.

- Measure quality with graph-native metrics. Track duplication rates, unresolved entities, edge conflict counts, provenance completeness, and “time to correctness” after publication. These metrics tend to be a better proxy for user trust than generic summarization scores, because they measure the structure users actually rely on.

- Ship starter graph products early to harden primitives. Products like lineage explorers and impact maps force real schema decisions and surface failure modes in resolution and provenance. They also generate usage traces that bootstrap personalization and routing.
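The hybrid retrieval note above can be illustrated with a toy sketch: candidate nodes are picked by cosine similarity over embeddings, then the result is constrained to edges of allowed types leaving those candidates. The two-dimensional vectors, node IDs, and the `hybrid_retrieve` function are assumptions for illustration; a real system would use a vector index and a graph store.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_retrieve(query_vec, embeddings, edges, allowed_rels, top_k=2):
    """Vector step picks seed nodes; graph step keeps only typed edges from them."""
    seeds = sorted(
        embeddings,
        key=lambda node: cosine(query_vec, embeddings[node]),
        reverse=True,
    )[:top_k]
    subgraph = [(s, r, d) for (s, r, d) in edges if s in seeds and r in allowed_rels]
    return seeds, subgraph


# Toy embeddings and edges with hypothetical IDs.
embeddings = {"rule:12": [1.0, 0.0], "rule:40": [0.0, 1.0], "faq:3": [0.7, 0.7]}
edges = [
    ("rule:12", "amends", "rule:09"),
    ("rule:12", "mentions", "press:1"),
    ("faq:3", "clarifies", "rule:12"),
    ("rule:40", "amends", "rule:39"),
]
seeds, subgraph = hybrid_retrieve([0.9, 0.1], embeddings, edges, {"amends", "clarifies"})
print(seeds)     # ['rule:12', 'faq:3']
print(subgraph)  # [('rule:12', 'amends', 'rule:09'), ('faq:3', 'clarifies', 'rule:12')]
```

The semantic step finds what the query is about, and the typed traversal decides which relationships are allowed into the answer, so a loosely related press mention is filtered out.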

Closing perspective

Context graphs shift regulatory monitoring from “retrieve documents” to “navigate evolving context.” With continuous acquisition, disciplined graph construction, agentic maintenance with human verification, user traces, and a modeling layer, the system can deliver intelligence as explainable subgraphs rather than fragile summaries.

The result is a regulatory knowledge system that scales with volume, improves with use, and supports the defensibility requirements that compliance workflows demand.