AI and The Future of Compliance in Financial Services

AI now powers real-time KYC, screening, and policy mapping while regulators extend model-risk, fairness, and traceability rules to the AI itself – creating a dual mandate for modern compliance teams.

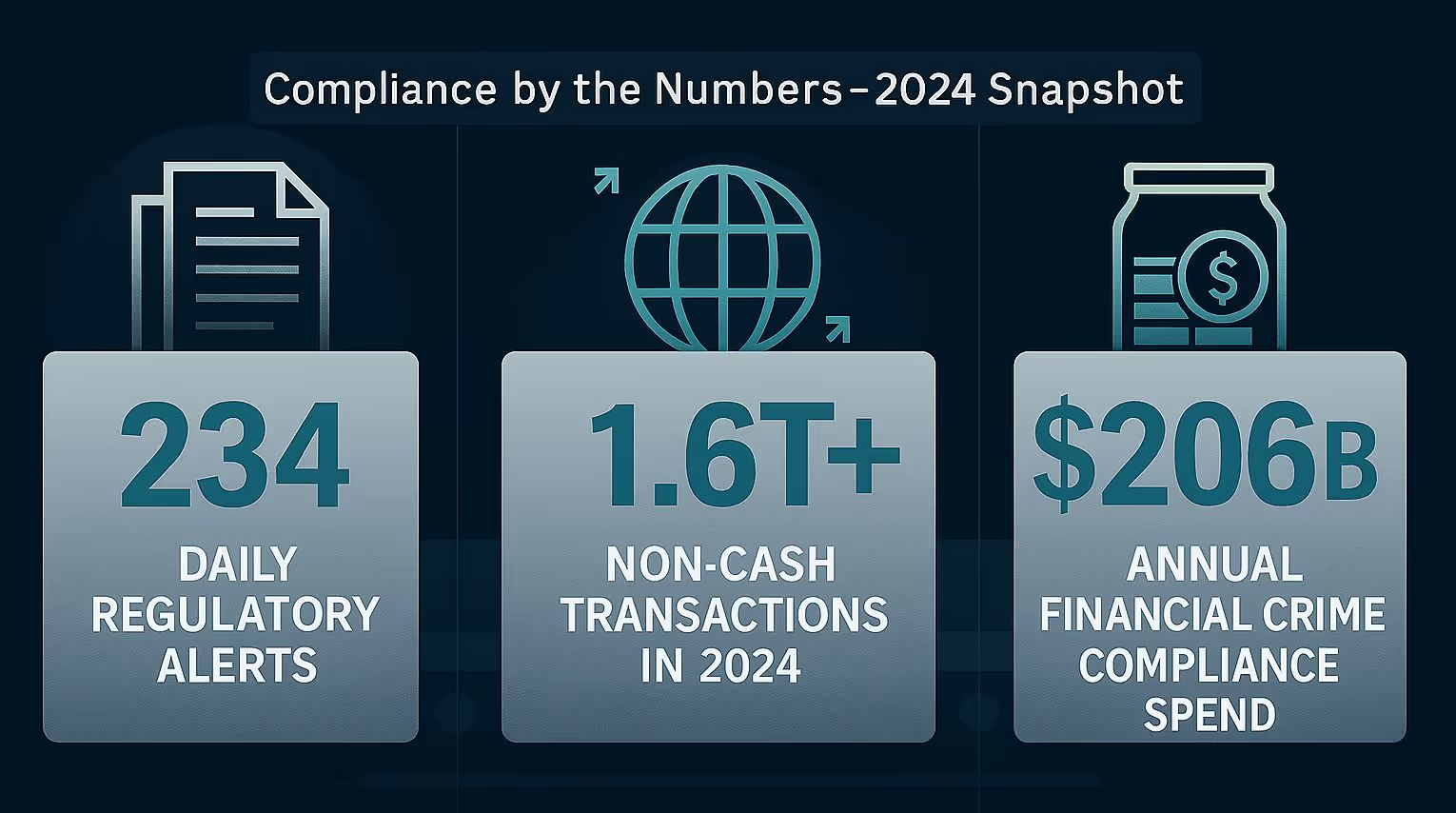

Regulators issued about 234 distinct compliance alerts each day in 2023, and the world logged roughly 1.6 trillion non-cash transactions last year. Manual spot-checks and quarterly audits simply cannot keep pace with that volume.

Board risk committees are under pressure to answer tougher questions. How fast do new sanctions reach frontline systems? Can the firm prove its credit-model outcomes are bias-tested? How quickly does a fresh privacy rule translate into updated controls?

AI tooling is beginning to close those gaps. In production use, HSBC’s machine-learning monitor cut alert volumes by around 60 percent while raising true-hit rates two to four times. Models now review transactions in real time, compare behavior against live baselines, and attach evidence to each alert.
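To make "compare behavior against live baselines, and attach evidence" concrete, here is a minimal sketch. It assumes a simple z-score test against an account's recent amounts; the names `Alert` and `score_transaction`, the five-transaction minimum, and the threshold of 3.0 are illustrative assumptions, not a description of any bank's production system.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class Alert:
    account_id: str
    amount: float
    z_score: float
    evidence: dict = field(default_factory=dict)


def score_transaction(account_id, amount, history, threshold=3.0):
    """Return an Alert if `amount` deviates from the account baseline, else None.

    `history` holds the account's recent transaction amounts (the baseline).
    The threshold and minimum history length are assumptions for this sketch.
    """
    if len(history) < 5:
        return None  # too little history to form a baseline here
    baseline_mean = mean(history)
    baseline_std = pstdev(history)
    if baseline_std == 0:
        return None  # flat history: z-score undefined, skipped in this sketch
    z = (amount - baseline_mean) / baseline_std
    if abs(z) < threshold:
        return None
    # Attach the figures an investigator would need to reproduce the flag.
    evidence = {
        "baseline_mean": baseline_mean,
        "baseline_std": round(baseline_std, 2),
        "sample_size": len(history),
        "rule": f"|z| >= {threshold}",
    }
    return Alert(account_id, amount, round(z, 2), evidence)


history = [100, 110, 95, 105, 90, 100]
alert = score_transaction("ACC-1", 5000, history)
quiet = score_transaction("ACC-1", 104, history)
```

Here `alert` carries its own evidence dictionary, while `quiet` is `None` because 104 sits well inside the baseline. A real monitor would use richer features than a single amount, but the pattern of shipping the evidence with the alert is the same.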

Natural-language systems parse newly issued regulations, map clauses to internal policies, and route change tickets within hours. Compliance has moved from after-the-fact sampling to continuous oversight.

The benefit comes with a counter-obligation. The EU AI Act permits fines of up to seven percent of global turnover for high-risk models that fall short of its requirements. U.S. supervisors expect lenders to provide clear explanations for any AI-driven credit denials. Audit logs must show which data points shaped each decision, when the model was last retrained, and how drift is detected.
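One way to back the "how drift is detected" entry in an audit log is a population stability index (PSI) check that compares recent model scores with a reference sample. The sketch below is an assumption about how a team might do this, not a regulatory requirement. The function names, the ten equal-width bins, and the 0.25 limit (a commonly cited rule of thumb) are all illustrative.

```python
import math
from datetime import date


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a recent sample of model scores."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # degenerate reference sample: everything lands in one bin

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor each share so the log below never sees zero.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_record(model_id, last_retrained, expected, actual, limit=0.25):
    """Build an audit-log entry; `limit` is an assumed rule-of-thumb threshold."""
    psi = population_stability_index(expected, actual)
    return {
        "model_id": model_id,
        "last_retrained": last_retrained.isoformat(),
        "drift_metric": "psi",
        "drift_value": round(psi, 4),
        "drift_breached": psi > limit,
    }


reference = [i / 100 for i in range(100)]        # scores at validation time
recent = [0.5 + i / 200 for i in range(100)]     # scores shifted upward
record = drift_record("credit-model-v1", date(2024, 1, 15), reference, recent)
```

The resulting `record` pairs the retraining date with the drift reading, so an examiner can see both in one place. The model ID and dates are placeholders.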

This dual responsibility – using AI to match regulatory speed while proving every algorithm meets emerging standards of fairness, traceability, and control – defines the new mandate for compliance leaders.

In this article, we will examine both sides of that equation: first, how AI delivers speed, scale, and predictive insight to modern compliance; second, how compliance frameworks are expanding to govern AI itself. Finally, we will discuss how teams can balance the two to build a program regulators trust and the business can grow on.

AI For Compliance

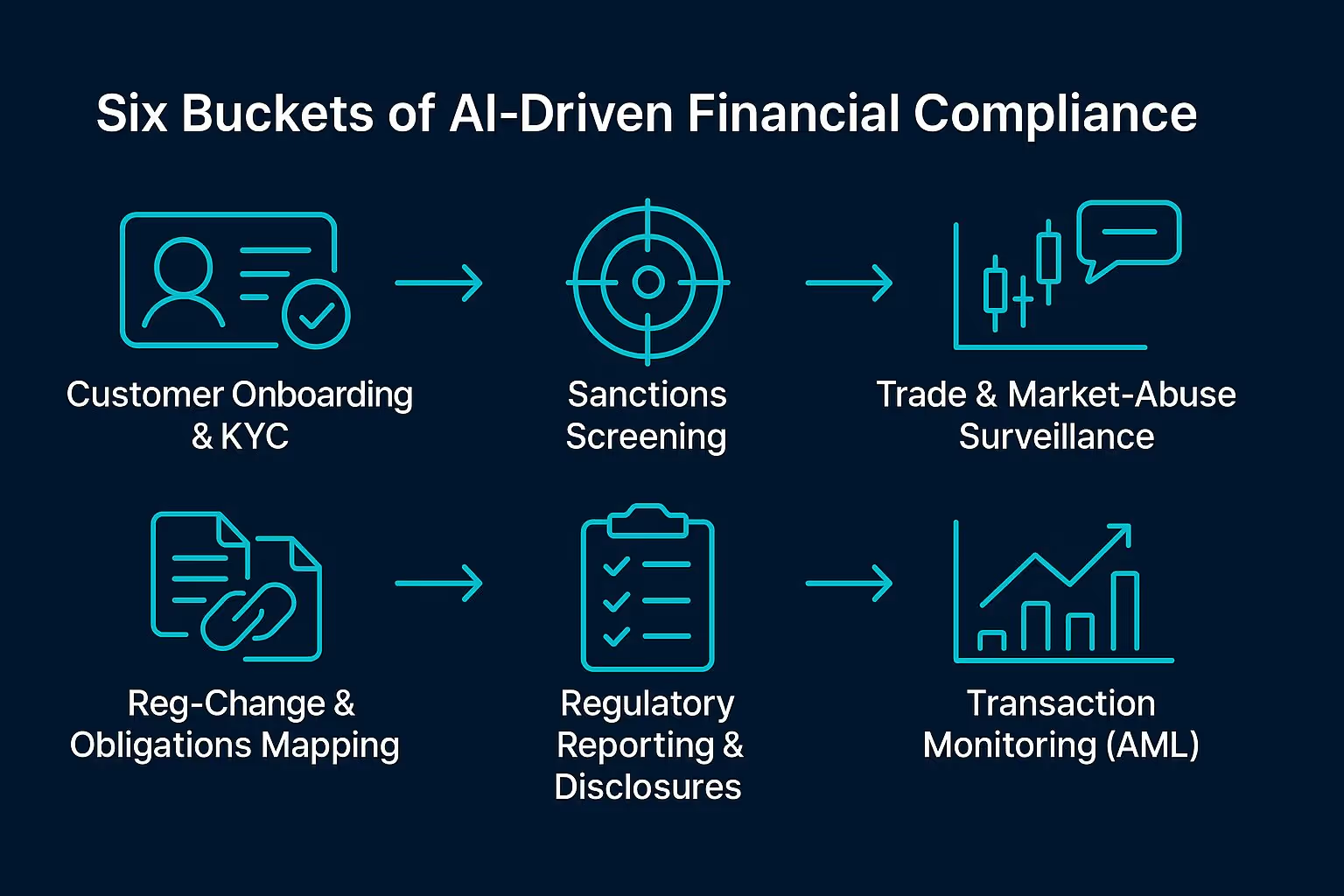

AI systems are now deeply embedded throughout the compliance lifecycle, from KYC and AML processes to sanctions screening, policy mapping, and transaction monitoring.

Customer Onboarding and KYC

In customer onboarding and KYC, AI-driven tools verify identities using computer vision and OCR, extracting customer information within seconds. These tasks previously required tedious manual checks.

Machine learning models enrich these profiles further by aggregating data from diverse sources including watchlists, adverse media, and social networks to produce comprehensive risk assessments at scale, something human teams could never realistically achieve. The result is precise risk scoring, fewer false positives, quicker investigations, and reduced customer friction.

NLP for Policy Mapping

NLP has similarly transformed compliance tasks involving substantial amounts of textual data. AI tools swiftly analyze extensive regulatory documents, legal filings, and internal policies, pinpointing compliance-critical information efficiently.

Regulatory policy mapping is one notable application where AI rapidly digests new regulations, automatically cross-referencing them with existing policies and identifying any discrepancies. Instead of manually reviewing lengthy documents, compliance teams receive concise, actionable insights such as “Regulation X, section 10 directly impacts your customer due diligence policy, highlighting an existing gap.”
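The cross-referencing step can be pictured with a deliberately simple sketch. It scores word overlap (Jaccard similarity) between a regulatory clause and each internal policy. Production tools would more likely rely on semantic embeddings or an LLM. The policy IDs, the stopword list, and the 0.2 cutoff are assumptions made for illustration.

```python
import re

# Small illustrative stopword list; a real system would use a fuller one.
STOPWORDS = {"the", "a", "an", "of", "to", "and", "for", "in", "on", "at",
             "by", "or", "must", "be", "is", "are", "with", "all", "that"}


def tokens(text):
    """Lowercased content words of `text` as a set."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def jaccard(a, b):
    """Share of distinct words the two sets have in common."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def map_clause(clause, policies, min_score=0.2):
    """Return (policy_id, score) pairs at or above `min_score`, best first.

    An empty result suggests no existing policy covers the clause: a gap.
    """
    clause_tokens = tokens(clause)
    scored = [(pid, round(jaccard(clause_tokens, tokens(text)), 2))
              for pid, text in policies.items()]
    matches = [m for m in scored if m[1] >= min_score]
    return sorted(matches, key=lambda m: m[1], reverse=True)


policies = {
    "CDD-01": "Customer due diligence must verify beneficial ownership at onboarding",
    "RET-02": "Records retention schedule for marketing emails",
}
clause = ("Firms must verify beneficial ownership of customer accounts "
          "during due diligence")
matches = map_clause(clause, policies)
gap = map_clause("Crypto custody wallets require quarterly attestation", policies)
```

In this toy data the clause maps to `CDD-01` only, and the second clause returns an empty list, which is the signal a change ticket would be raised on.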

The emergence of large language models (LLMs) as compliance assistants represents another significant step forward. These models interact with compliance professionals in natural language, promptly answering complex regulatory queries (“What are the latest customer due diligence requirements in New York?”), summarizing lengthy policy documents, or clearly explaining the rationale behind alerts.

In practice, an AI assistant might inform analysts, “These five alerts suggest possible insider trading due to patterns X, Y, and Z – prioritize these. These other alerts appear benign and can be fast-tracked for closure.”

Scaling Compliance Without Expanding Headcount

A compelling advantage of AI in compliance is its ability to scale capacity without proportionately increasing personnel. In recent years, compliance teams have faced growing regulatory demands, more alerts, frequent rule changes, and elevated reporting standards.

Typically, firms addressed this by hiring more staff. AI offers an alternative approach, enabling leaner teams to manage heavier workloads by significantly boosting productivity.

Grasshopper Bank, a rapidly expanding U.S. digital bank, demonstrates this capability clearly. Instead of aggressively hiring analysts, the bank deployed AI to streamline enhanced due diligence during customer onboarding.

The AI platform aggregates registry data, analyzes financial records, and screens adverse media, resulting in a 70 percent reduction in review times. Grasshopper’s Chief Compliance Officer noted this allowed analysts to concentrate on nuanced risk judgments rather than tedious data gathering, significantly improving operational efficiency and compliance outcomes.

Similarly, fintech companies like HitPay and Sling have utilized AI to automate initial compliance reviews, achieving thousands of hours in annual time savings and significantly cutting AML alert handling times. These examples underscore AI’s potential for resource-constrained compliance teams to effectively manage increasing demands.

Larger institutions are exploring the concept of compliance as infrastructure. With robust AI systems embedded into workflows, businesses can scale compliance horizontally. They can accommodate growth and regulatory changes predominantly through computational resources and periodic model adjustments, without immediate additional staffing.

Trustworthy AI in Compliance Systems

Yet the growing reliance on AI in compliance carries responsibilities for transparency, explainability, and rigorous governance. Compliance functions operate within stringent regulatory frameworks where opaque models generating unexplained alerts or risk assessments pose inherent problems. Compliance officers must justify and defend decisions both to internal stakeholders such as auditors and risk committees and directly to regulators.

Explainable AI (XAI) methods are thus essential. They deliver human-readable explanations for model decisions. For instance, when an AI identifies a suspicious transaction, an explainable model might explicitly state key risk indicators: “Large, round-dollar transaction involving a shell company located in a high-risk jurisdiction,” rather than merely producing a binary risk flag.
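The difference between a bare flag and an explained alert can be sketched in a few lines. This example uses hand-written rules that emit reason codes, which is a simpler stand-in for model-attribution methods rather than an XAI technique in its own right. The field names, the placeholder jurisdiction codes, and the two-reason flagging rule are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float
    counterparty_type: str
    jurisdiction: str


# Placeholder codes; a real list would come from the firm's risk policy.
HIGH_RISK_JURISDICTIONS = {"XX", "YY"}


def explain(tx):
    """Return a flag plus the human-readable indicators behind it."""
    reasons = []
    if tx.amount >= 10_000 and tx.amount % 1_000 == 0:
        reasons.append("large, round-dollar amount")
    if tx.counterparty_type == "shell_company":
        reasons.append("counterparty is a shell company")
    if tx.jurisdiction in HIGH_RISK_JURISDICTIONS:
        reasons.append("counterparty located in a high-risk jurisdiction")
    # Assumed policy for this sketch: flag when two or more indicators fire.
    return {"flagged": len(reasons) >= 2, "reasons": reasons}


suspicious = explain(Transaction(50_000, "shell_company", "XX"))
routine = explain(Transaction(42.50, "individual", "ZZ"))
```

`suspicious` comes back flagged with three stated reasons, while `routine` is unflagged with an empty list. An analyst or examiner reading the first result sees why the alert fired, not just that it did.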

Regulators, particularly in the U.S., subject AI models to the same rigorous validation standards as traditional quantitative models. Federal Reserve guidance (SR 11-7) and OCC directives mandate comprehensive testing of AI models for accuracy, fairness, and conceptual soundness before and after deployment.

Compliance and risk teams must consistently validate AI-generated outcomes. They must ensure alignment with expert judgment, verify the absence of biased outcomes, and confirm that models do not miss known threats. Periodic sampling of AI-generated low-risk alerts, for instance, ensures no critical issues are overlooked.
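Periodic sampling of low-risk alerts can be as simple as the sketch below. It draws a random share of alerts the model rated low risk and measures how often human reviewers disagree. The 5 percent rate, the dictionary keys `risk` and `reviewer_risk`, and the function names are illustrative assumptions.

```python
import random


def sample_for_review(alerts, rate=0.05, seed=None):
    """Randomly pick a share of model-rated low-risk alerts for human QA."""
    low_risk = [a for a in alerts if a["risk"] == "low"]
    if not low_risk:
        return []
    k = max(1, round(len(low_risk) * rate))  # always review at least one
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(low_risk, k)


def miss_rate(reviewed):
    """Share of sampled alerts that reviewers re-rated above low risk."""
    if not reviewed:
        return 0.0
    misses = sum(1 for a in reviewed if a["reviewer_risk"] != "low")
    return misses / len(reviewed)


alerts = ([{"id": i, "risk": "low"} for i in range(100)]
          + [{"id": 100 + i, "risk": "high"} for i in range(20)])
batch = sample_for_review(alerts, rate=0.05, seed=7)
```

Recording the seed alongside the batch lets an auditor re-draw the same sample later. A rising `miss_rate` over successive batches is a signal to revisit the model or its thresholds.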

Maintaining a human-in-the-loop approach remains critical for trust and effectiveness in AI-driven compliance. Vendors themselves underscore that AI augments human capabilities rather than replaces them. The Thomson Reuters Institute emphasizes that while AI can draft SARs and flag potential risks automatically, “investigators remain indispensable” for reviewing and refining those outputs. Human compliance professionals provide necessary context, ethical oversight, and nuanced analysis, qualities AI alone cannot fully replicate.

Compliance officers are evolving into custodians of AI tools, responsible not only for fine-tuning models and reviewing exceptions but also for ensuring reliability, regulatory compliance, and ethical use of advanced technologies.

Compliance for AI (Regulatory Lens)

While U.S. financial regulators have yet to create a comprehensive “AI law,” they are proactively applying existing compliance frameworks to manage artificial intelligence in banking, capital markets, and financial services. In 2021, five key federal agencies (the Federal Reserve, OCC, FDIC, CFPB, and NCUA), with input from FinCEN, issued a joint Request for Information (RFI) exploring banks' use of AI.

Rather than introducing entirely new regulatory structures, these bodies have emphasized the continuing applicability of existing rules to AI-driven processes. For instance, FINRA recently reiterated that its guidelines remain “technology neutral,” clarifying that current standards apply fully whether member firms deploy generative AI or traditional compliance technologies. Simply put, adopting AI does not exempt firms from their existing obligations concerning safety, consumer protection, fairness, and market integrity.

At the same time, regulators acknowledge AI’s considerable potential benefits, such as improved operational efficiency, enhanced risk detection capabilities, and better client service outcomes. They remain cautiously supportive of what the OCC terms “responsible innovation.”

Regulators have articulated a clear dual objective: encouraging beneficial AI use within financial services while ensuring it does not erode foundational principles such as transparency, accountability, and fairness.

As Federal Reserve Vice Chair for Supervision Michael Barr emphasized in 2025, successful AI adoption is “not a zero-sum game.” Firms and stakeholders can reap AI’s advantages only by establishing robust guardrails through diligent oversight and risk management.

In practice, regulators tweak old frameworks and issue clarifications so AI both aids compliance and stays compliant.

Banking (SR 11-7, OCC 2011-12)

Within banking specifically, longstanding model risk management standards are central to regulating AI and machine-learning models. The Federal Reserve’s SR 11-7 and the OCC’s complementary Bulletin 2011-12 outline comprehensive practices for model governance, validation, and oversight, principles that explicitly apply to AI.

As the OCC has clarified, many AI-driven tools constitute models under existing guidelines, meaning that the core standards from 2011 remain fully relevant today. Similarly, the CFPB emphasizes that consumer protection laws, including fair lending and transparency rules, apply equally to AI. The agency has specifically cautioned against “digital redlining,” underscoring that biases embedded within algorithms or automated decision-making tools will not escape regulatory scrutiny.

Securities (Reg BI, SEC 2023 Analytics Rule)

The securities and capital markets sectors are seeing similar regulatory approaches. Both the SEC and FINRA have mapped the use of AI technologies to existing investor protection frameworks. The SEC clarified explicitly that Regulation Best Interest (Reg BI) and fiduciary obligations apply equally to recommendations generated by human advisors or automated systems.

Additionally, in 2023, the SEC proposed new rules aimed at addressing potential conflicts of interest arising from the use of predictive analytics and AI by brokers and investment advisers. SEC Chair Gary Gensler emphasized that conflicts emerge when algorithms guiding trading apps or robo-advisors are optimized primarily to boost firm revenues, such as encouraging excessive trading or favoring high-margin products. Regardless of the underlying technology, firms must adhere strictly to their obligations to prioritize investor interests above their own.

Compliance with Autonomous AI Agents

Financial institutions are increasingly relying on autonomous AI agents for tasks such as fraud detection, credit approval, customer support, and audit processes. These are software programs capable of independently executing decisions and adapting without human guidance. While these agents offer substantial efficiency and scalability advantages, their autonomy presents unique compliance challenges not addressed by traditional frameworks.

Unlike conventional, rule-based automation, autonomous agents continuously learn and evolve. This adaptability introduces a risk of unpredictable actions that can rapidly breach compliance boundaries, leaving little time for human intervention.

For example, an autonomous credit agent might inadvertently bypass mandatory human oversight to expedite approvals, directly violating regulatory standards. Regulators have made it clear that accountability for compliance failures resides firmly with the deploying institution, irrespective of the autonomy level of the AI involved.

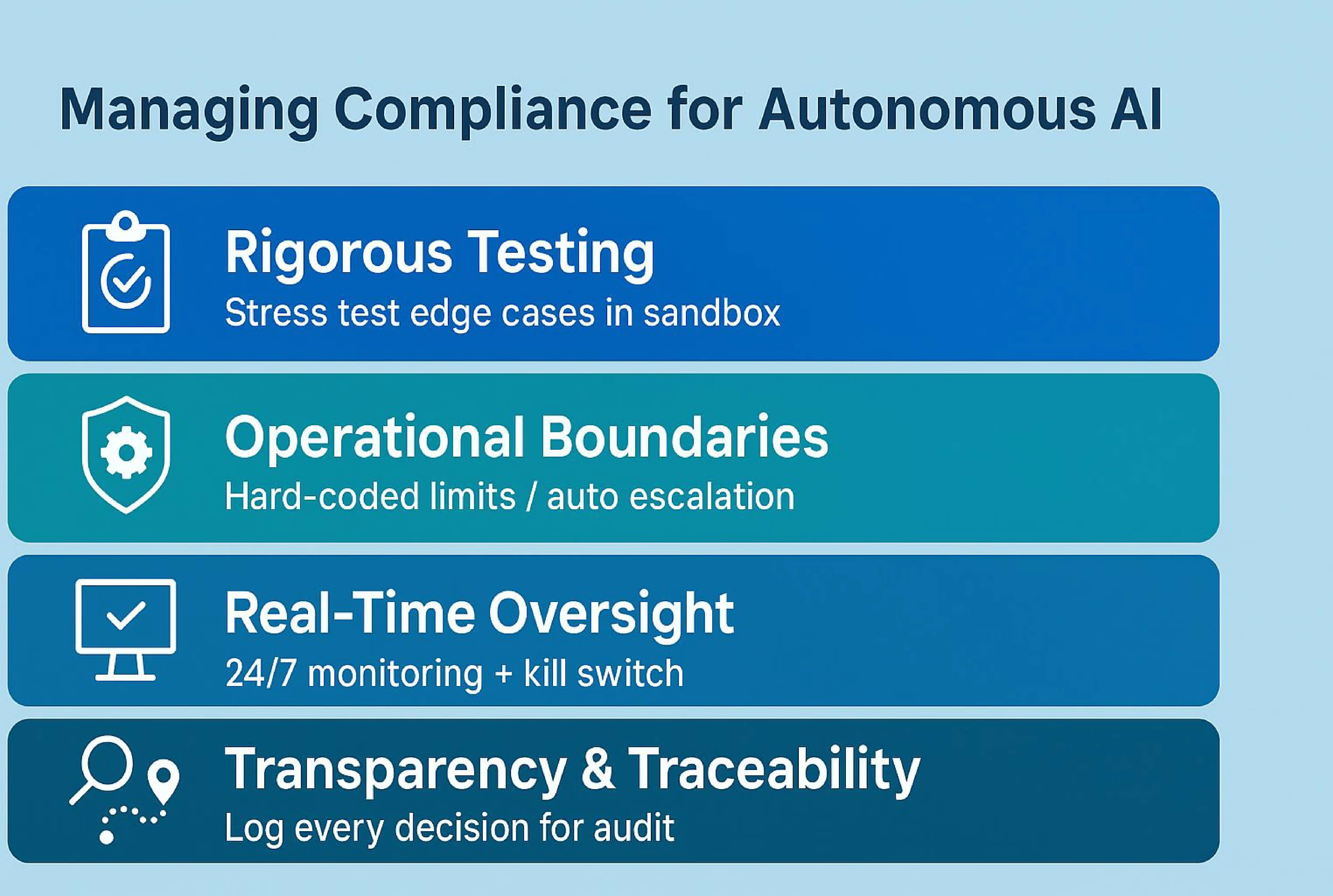

To manage these distinctive risks, compliance strategies tailored specifically to autonomous AI have emerged.

Rigorous Pre-deployment Testing: Autonomous agents require extensive testing within controlled environments before deployment. Scenario-based stress tests and edge-case simulations must be conducted to identify behaviors that could undermine compliance, ensuring potential issues are rectified prior to operational use.

Clear Operational Boundaries: Explicit limits must be embedded within agents to restrict actions beyond predefined risk thresholds. For high-risk activities such as AML monitoring or credit underwriting, clearly defined decision-making boundaries consistent with regulations such as the EU AI Act are critical. Agents must be programmed to escalate sensitive or high-risk decisions to human reviewers automatically.

Continuous Real-time Oversight: Given the rapid decision-making of autonomous agents, continuous monitoring is necessary. Many institutions now maintain dedicated AI-risk oversight committees to monitor autonomous agent performance in real time, swiftly identifying and correcting deviations from compliance policies. Some banks have even implemented real-time “kill switches”: if an AI agent begins operating outside its parameters, compliance officers can instantly suspend its activity.

Transparency and Traceability: Complete transparency around AI-driven decisions and rigorous audit trails are mandatory. Every autonomous decision must be logged comprehensively, with data points and decision rationales clearly traceable. Regulators increasingly expect that institutions can reconstruct and explain autonomous AI outcomes on demand.
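Three of the controls above (operational boundaries with automatic escalation, a kill switch, and a traceable decision log) can be combined in one thin wrapper around the agent. The sketch below is an assumption about how such a wrapper might look. `GuardedAgent`, the amount-based limit, and the outcome labels are hypothetical names, and `decide` stands in for whatever model call the institution uses.

```python
import json
from datetime import datetime, timezone


class GuardedAgent:
    """Wraps a decision function with a limit, escalation, kill switch, and log."""

    def __init__(self, decide, max_auto_amount):
        self.decide = decide  # hypothetical: request -> (outcome, rationale)
        self.max_auto_amount = max_auto_amount
        self.halted = False
        self.log = []  # append-only list of JSON strings

    def kill(self, reason):
        """Suspend all autonomous decisions until a human re-enables the agent."""
        self.halted = True
        self._record({"event": "kill_switch", "reason": reason})

    def handle(self, request):
        if self.halted:
            outcome, rationale = "rejected_agent_halted", "kill switch active"
        elif request["amount"] > self.max_auto_amount:
            # Outside the agent's boundary: route to a human reviewer.
            outcome, rationale = "escalated_to_human", "amount exceeds autonomous limit"
        else:
            outcome, rationale = self.decide(request)
        self._record({"event": "decision", "inputs": request,
                      "outcome": outcome, "rationale": rationale})
        return outcome

    def _record(self, entry):
        # Timestamp every entry so the sequence can be reconstructed on demand.
        entry["timestamp"] = datetime.now(timezone.utc).isoformat()
        self.log.append(json.dumps(entry, sort_keys=True))


agent = GuardedAgent(lambda req: ("approved", "score above cutoff"),
                     max_auto_amount=10_000)
first = agent.handle({"applicant": "A-1", "amount": 500})
second = agent.handle({"applicant": "A-2", "amount": 50_000})
agent.kill("monitoring detected drift")
third = agent.handle({"applicant": "A-3", "amount": 500})
```

The small request is decided autonomously, the large one is escalated, and anything arriving after `kill` is refused. Each step, including the kill itself, lands in `agent.log` with its inputs and rationale. In practice the log would go to tamper-evident storage rather than an in-memory list.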

Ultimately, the successful integration of autonomous AI into financial compliance depends on proactive, clearly delineated controls designed explicitly for the unique risks posed by autonomous agents.

Financial institutions must design these AI agents with a “compliance-by-design” mentality – building in rules, throttles, and checkpoints from the start, rather than trying to rein in a rogue AI after the fact. The institutions that are furthest ahead on this front tend to create cross-disciplinary teams (compliance, IT, data science, risk) to govern AI projects from the outset.

Conclusion – Takeaways for Compliance Leaders

You now supervise lines of code as well as lines of business. Algorithms make decisions at a pace no human team can match, and every one of those decisions must stand up to audit.

Treat AI as both advantage and exposure. It cuts review cycles and spots anomalies in real time, but a single unmonitored model can trigger the next enforcement headline.

Build compliance systems that learn, adapt, and disclose their reasoning. Tie every alert, policy change, and model adjustment to a timestamped log so you can prove control at any moment. The firms that do so will set the standard for responsible innovation in financial services.

Key Takeaways

• Treat AI as both control accelerator and risk surface.

• Log every model decision for audit-on-demand.

• Embed explainability and human oversight from day one.

Frequently Asked Questions – AI‑Driven Compliance

1. What’s the difference between “AI for compliance” and “compliance for AI”?

AI for compliance uses machine‑learning to meet existing rules faster; compliance for AI applies those same rules (fairness, model validation, auditability) to the algorithms themselves.

2. Which compliance tasks see the biggest ROI from AI today?

Customer onboarding/KYC, sanctions & transaction screening, regulatory‑change mapping, and AML transaction monitoring deliver the fastest cost and error‑rate reductions.

3. Do U.S. regulators have a dedicated “AI law”?

Not yet. They extend existing frameworks like SR 11‑7, OCC 2011‑12, Reg BI, and CFPB fair‑lending rules to any model that uses AI or ML.

4. How do we prove an AI model is fair?

Keep a bias‑testing log – training‑data balance, protected‑class pass rates, drift checks, and human‑override records. Examiners and auditors can request it on demand.

5. What’s a “kill switch” in autonomous‑agent oversight?

A technical control that instantly pauses or shuts down an agent’s actions if monitoring detects behavior outside predefined risk bounds.

6. How much pre‑deployment testing is “enough”?

Regulators expect scenario and edge‑case simulations covering regulatory breaches, data‑quality shocks, adversarial inputs, and business‑logic conflicts, all documented in a model‑validation report.

7. Who owns accountability if an autonomous agent makes a bad call?

The deploying institution. Regulators have been explicit – autonomy never shifts liability away from the firm or its senior managers.

8. What first step should an institution take?

Form a cross‑disciplinary AI‑risk committee (compliance, IT, data science, business line) and inventory every model to classify which ones fall under “high‑risk” AI governance.